March 19, 2007 - Characterization Tests Revisited

After I completed the first story, I generated some characterization tests with JUnit Factory.

Before I move on to the next story, I want to revisit those tests and make sure we have not introduced any regressions.

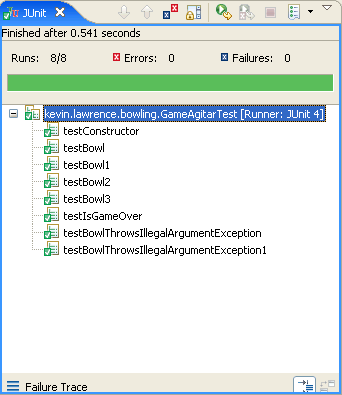

Seeing all of those tests pass gives me an additional level of comfort that I have not introduced any regressions but, to be honest, I didn't have much functionality to break when I generated those tests. Now that I have substantially more code, I'll regenerate the tests and see what they tell me.

For a TDD enthusiast such as myself, the idea that someone would just throw away all of their tests and recreate them is close to heresy. A little cost-benefit analysis, though, tells me that it is exactly the right thing to do.

On the cost side of the ledger, my investment consisted of a single push of the button plus 30 seconds waiting for the tests to come back. The return on that investment was a clarification of a requirement - I discovered that a frame has only 10 pins - and a suite of tests that I can re-run in the future to make sure I have not broken anything. That's already a significant return for a team that is doing 100% TDD but, for teams who are not quite so diligent, the additional safety net that the generated tests provide may make the difference between a happy customer and an angry phone call to tech support.

To regenerate the tests, I

- select the root of my project in Eclipse and

- click the Generate Tests button

I notice (again) that the Frame allows invalid rolls so I move the pin validation code down from Game.bowl() to Frame.addBall() and re-run all the tests.

There is a failure that tells me

java.lang.IllegalArgumentException: Invalid pin count - 100

which is just what you'd expect when you add code to validate the pin count. I changed the behavior of a method and the tests reported that the behavior changed. That might seem redundant but I'd be suspicious if a test did not fail when the behavior changed and it's no big deal to regenerate the tests to capture the new behavior.
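The move described above can be sketched roughly as follows. This is a minimal illustration, not the project's real code: the `MAX_PINS` constant, the `balls` list, `ballCount()` and the one-frame `Game` are assumptions; only `Game.bowl()`, `Frame.addBall()` and the format of the exception message come from the post.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, cut-down Frame: only the parts needed to show the validation.
class Frame {
    static final int MAX_PINS = 10; // assumed name for the pin limit
    private final List<Integer> balls = new ArrayList<>();

    void addBall(int pins) {
        // The check now lives in Frame, so a Frame rejects bad input
        // even when it is exercised without a Game.
        if (pins < 0 || pins > MAX_PINS) {
            throw new IllegalArgumentException("Invalid pin count - " + pins);
        }
        balls.add(pins);
    }

    int ballCount() {
        return balls.size();
    }
}

// Hypothetical, cut-down Game with a single frame: it no longer validates,
// it simply delegates to the frame.
class Game {
    private final Frame current = new Frame();

    void bowl(int pins) {
        current.addBall(pins);
    }
}

class ValidationDemo {
    public static void main(String[] args) {
        try {
            // A characterization test that recorded this call as "accepted"
            // before the change now fails at this point.
            new Frame().addBall(100);
        } catch (IllegalArgumentException e) {
            System.out.println(e.getMessage()); // prints "Invalid pin count - 100"
        }
    }
}
```

A test that was generated while `Frame` still accepted any value has captured the old behavior, which is why it starts failing as soon as the check moves down.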

I scan the generated tests some more and find this howler:

    public void testGetCumulativeScore5() throws Throwable {
        Frame frame = new Frame(null);
        frame.addBall(1);
        frame.addBall(10);
        int result = frame.getCumulativeScore();
        assertEquals("result", 11, result);
    }

That's a test that I wish I had in my manual suite, so I copy it over to FrameTest, clean up the names and change the expected result to something more sensible:

    @Test
    public void totalForTwoBallsShouldNotExceed10() {
        Frame frame = new Frame(null);
        frame.addBall(1);
        try {
            frame.addBall(10);
            fail();
        }
        catch(IllegalArgumentException e) {
            assertThat(frame.getCumulativeScore(), is(1));
        }
    }

Now I change addBall() to reject the invalid ball...

    if(firstBall != null && firstBall + pins > 10) {
        throw new IllegalArgumentException("Total pin count invalid");
    }
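In context, the check might sit in `addBall()` something like this. It is a sketch under assumptions: only `firstBall` and the two exception messages appear in the post, while `secondBall`, the no-argument constructor and the simplified `getCumulativeScore()` are made up for illustration (the real `Frame` takes a previous frame and has to deal with strikes, spares and the tenth frame).

```java
// Hypothetical, simplified Frame showing where the new check fits.
class Frame {
    private Integer firstBall;  // null until the first ball is bowled
    private Integer secondBall; // assumed field; not shown in the post

    void addBall(int pins) {
        if (pins < 0 || pins > 10) {
            throw new IllegalArgumentException("Invalid pin count - " + pins);
        }
        // Validate before mutating, so a rejected ball leaves the frame unchanged.
        if (firstBall != null && firstBall + pins > 10) {
            throw new IllegalArgumentException("Total pin count invalid");
        }
        if (firstBall == null) {
            firstBall = pins;
        } else {
            secondBall = pins;
        }
    }

    // Simplified: the pins knocked down in this frame only,
    // ignoring previous frames and bonus balls.
    int getCumulativeScore() {
        int first = (firstBall == null) ? 0 : firstBall;
        int second = (secondBall == null) ? 0 : secondBall;
        return first + second;
    }
}
```

Checking before assigning is what allows the catch block in the manual test to assert that the score is still 1 after the invalid ball has been rejected.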

The test passes and I regenerate all of my JUnit Factory tests. The new test for addBall() ...

    public void testAddBallThrowsIllegalArgumentException() throws Throwable {
        Frame frame = new Frame(null);
        frame.addBall(1);
        try {
            frame.addBall(10);
            fail("Expected IllegalArgumentException to be thrown");
        } catch (IllegalArgumentException ex) {
            assertEquals("ex.getMessage()", "Total pin count invalid", ex.getMessage());
            assertThrownBy(Frame.class, ex);
            assertEquals("frame.firstBall", 1, ((Number) getPrivateField(frame, "firstBall")).intValue());
            assertTrue("frame.needsMoreBalls()", frame.needsMoreBalls());
        }
    }

...is not exactly what I would have written but it's quite comprehensive. Actually, the whole suite of generated

tests is quite comprehensive. When I run them, I get line, branch and condition coverage for all of my code except the

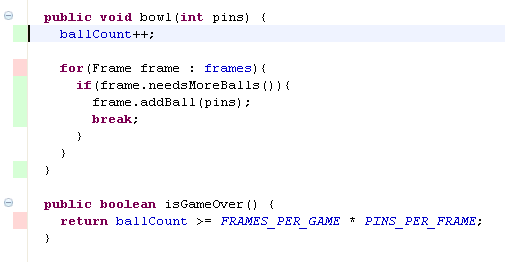

loop condition in Game.bowl() and the Game Over condition in Game.isGameOver():

100% coverage in Frame and 96% in Game is not bad - it's certainly better than no tests at

all - and compares quite favourably with my own manually written tests. My TDD'd tests hit the Game Over condition but

also missed the additional ball after the game is finished.

I have linked the entire suite of generated tests for Game and Frame so you can review them yourself. I am sure you'll agree that they are quite comprehensive.
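The generated addBall() test shown earlier inspects the frame's state with `getPrivateField(frame, "firstBall")`. How JUnit Factory implements that helper is not shown in this post; a stand-in built on plain `java.lang.reflect` might look like the sketch below, where the `PrivateFields` class and the cut-down `Frame` are hypothetical.

```java
import java.lang.reflect.Field;

// Hypothetical stand-in for the helper used by the generated tests.
class PrivateFields {
    static Object getPrivateField(Object target, String name)
            throws ReflectiveOperationException {
        // Looks only at the object's own class, not at its superclasses.
        Field field = target.getClass().getDeclaredField(name);
        field.setAccessible(true); // bypass the private modifier
        return field.get(target);
    }
}

// Cut-down Frame with just the private state that the test inspects.
class Frame {
    private Integer firstBall;

    void addBall(int pins) {
        if (firstBall == null) {
            firstBall = pins;
        }
    }
}
```

Asserting on private state ties a test to the implementation, which is a reasonable trade for characterization tests that are regenerated rather than maintained by hand.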

JUnit Factory was not really designed to be used for TDD. Where it really comes into its own is on that big, steaming pile of legacy code that you just inherited with no tests but, because the tests are so cheap and easy to generate, it's nice to have them around - even for code that you are TDDing. It's a pleasant bonus that JUnit Factory works so well on the kind of well-factored, modular code that usually results from TDD.

Before I move on, I should do a little spring cleaning. I noticed a couple of opportunities for refactoring while I was reviewing the test results. In Frame, I have the number 10 hard-coded twice when there is a perfectly good constant PINS_PER_FRAME. I replace it and run the test and the embarrassing failure...

java.lang.IllegalArgumentException: Invalid pin count - 9

...makes me realize that the constant PINS_PER_FRAME should actually have been called BALLS_PER_FRAME. How come no one noticed that? How come I didn't notice that? My mistake was caught by the TDD tests, the acceptance tests and, of course, JUnit Factory's characterization tests so no harm was done. I rename the constant in Game and introduce a new one - Frame.PINS_PER_FRAME.
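The mix-up is easy to reproduce in miniature. In this sketch the value 2 for the renamed constant is an assumption (the post does not show it), and the two `validate...` methods are hypothetical helpers that stand in for the check inside `Frame.addBall()`.

```java
class Game {
    // Formerly (and misleadingly) named PINS_PER_FRAME; the value is assumed.
    static final int BALLS_PER_FRAME = 2;
}

class Frame {
    static final int PINS_PER_FRAME = 10;

    // What the first refactoring attempt effectively did:
    // it compared the pin count against the number of balls.
    static void validateWithMisnamedConstant(int pins) {
        if (pins < 0 || pins > Game.BALLS_PER_FRAME) {
            throw new IllegalArgumentException("Invalid pin count - " + pins);
        }
    }

    // After the rename, the check uses a constant that means what it says.
    static void validate(int pins) {
        if (pins < 0 || pins > PINS_PER_FRAME) {
            throw new IllegalArgumentException("Invalid pin count - " + pins);
        }
    }
}
```

With the misnamed constant, a perfectly legal roll of 9 is rejected, which matches the failure message above.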

That variable ballCount is not strictly necessary now that we can ask the last frame if the game is done but I have a hunch it will disappear quite soon anyway. On to rule 2.1.3.

Posted by Kevin Lawrence at March 19, 2007 02:25 PM

Comments

Kevin,

I don't fully agree with the following statement:

"JUnit Factory was not really designed to be used for TDD"

I don't think TDD has to equal test-first development. If we agree on this point, JUnitFactory is a perfectly useful tool for the job, unless my design will somehow suffer from relying on it.

Posted by: David Vydra on March 27, 2007 06:58 AM

I think TDD should mean EXACTLY the process described in Kent Beck's book Test-Driven Development. If the acronym TDD gets hijacked or wishy-washed down, then we'll need another term for Kent's test/code/refactor process.

My broader point, though, is that JUF might turn out to be useful for a great many purposes - even though it was not specifically designed for them.

Posted by: Kevin Lawrence on April 2, 2007 04:15 PM